I am Zhenyi He, a Ph.D. student in the NYU Future Reality Lab (FRL), advised by Professor Ken Perlin. I currently work on Virtual Reality (VR), Augmented Reality (AR), and Human-Computer Interaction (HCI). See my CV and research statement.

Before I joined FRL, I was in the Digital Art Lab at Shanghai Jiao Tong University and was advised by Professor Xubo Yang.

I have also collaborated with Professor Xiaojuan Ma from HKUST, Professor Christof Lutteroth from the University of Bath, and Google research scientist Ruofei Du.

Welcome to my reality. I would be glad if you enjoy any second of my work. Contact me if your interests overlap with mine. Leave comments or email me: zhenyi.he@nyu.edu.

- 11/16/21: Our paper TapGazer: Touch Typing with Finger Tapping and Gaze-directed Word Selection was conditionally accepted to CHI 2022.

- 08/21/21: Our paper GazeChat: Enhancing Virtual Conferences with Gaze Awareness and Interactive 3D Photos was officially accepted to UIST 2021.

- 06/25/21: Our paper on gaze-based virtual conferencing was conditionally accepted to UIST 2021.

- 04/05/21: Our paper on render-based factorization was accepted to CASA 2021.

Research

GazeChat: Enhancing Virtual Conferences with Gaze-aware 3D Photos

This paper introduces GazeChat, a remote communication system that visually represents users as gaze-aware 3D profile photos. This satisfies users’ privacy needs while keeping online conversations engaging and efficient. GazeChat uses a single webcam to track whom any participant is looking at, then uses neural rendering to animate all participants’ profile images so that participants appear to be looking at each other.

Render-based factorization for additive light field display

Augmented Reality (AR) and Virtual Reality (VR) applications enable viewers to experience 3D graphics immersively. However, current hardware either fails to provide multiple viewpoints, as with projectors, or causes conflicts between vergence and accommodation, as with consumer headsets. Prior researchers have designed multi-view displays that address the problem by enabling view-dependent images and focus cues. Nevertheless, these displays require minutes to compute one frame, which is prohibitive for real-time AR/VR applications. In this paper, we propose a new render-based factorization for additive light field displays and further improve performance by optimizing the initialization of layers. We then compare our work with the state of the art; the results show that our solution is competitive while computing each frame in less than 20 ms.

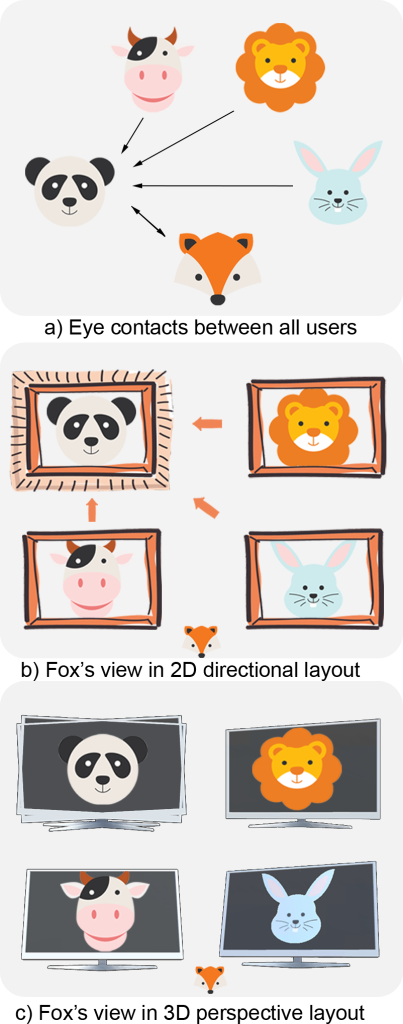

Augment Remote Small-Group Conversation through Eye Contacts Visualization

We introduce LookAtChat, a web-based video conferencing system that empowers remote users to identify eye contact and spatial relationships in small-group conversations. Leveraging real-time eye-tracking technology available with ordinary webcams, LookAtChat tracks each user’s gaze direction, identifies who is looking at whom, and provides corresponding spatial cues. Informed by formative interviews with 5 participants who regularly use videoconferencing software, we explored the design space of eye contact visualization in both 2D and 3D layouts. We further conducted an exploratory user study (N=20) to evaluate LookAtChat in three conditions: baseline layout, 2D directional layout, and 3D perspective layout.

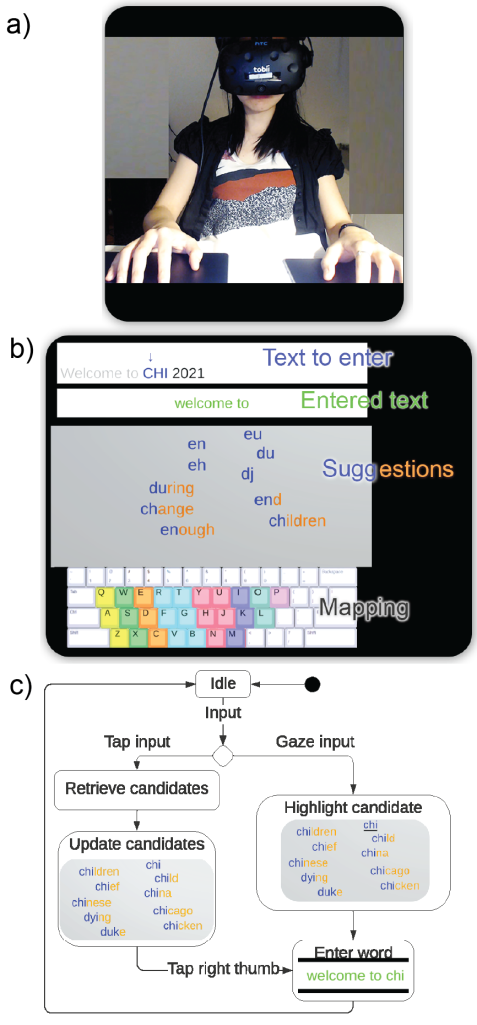
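
As a rough illustration of the "who is looking at whom" step in LookAtChat, here is a minimal sketch. It is not the LookAtChat implementation: it assumes each viewer's gaze is already available as a normalized screen point, and the `Tile` layout and the function names `gaze_target` and `mutual_eye_contact` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional, Set, Tuple


@dataclass(frozen=True)
class Tile:
    """Hypothetical on-screen video tile of one remote participant.

    Coordinates are normalized to [0, 1] on the viewer's screen.
    """
    user: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, gx: float, gy: float) -> bool:
        return self.x <= gx < self.x + self.w and self.y <= gy < self.y + self.h


def gaze_target(tiles: List[Tile], gaze: Tuple[float, float]) -> Optional[str]:
    """Return the participant whose tile contains the gaze point, if any."""
    gx, gy = gaze
    for tile in tiles:
        if tile.contains(gx, gy):
            return tile.user
    return None


def mutual_eye_contact(looking_at: Dict[str, Optional[str]]) -> Set[FrozenSet[str]]:
    """Find pairs of participants who are looking at each other."""
    pairs: Set[FrozenSet[str]] = set()
    for viewer, target in looking_at.items():
        if target is not None and looking_at.get(target) == viewer:
            pairs.add(frozenset((viewer, target)))
    return pairs
```

For example, with tiles for "bob" and "carol" on Alice's screen, `gaze_target` maps Alice's gaze point to one of them, and `mutual_eye_contact` over everyone's targets yields the pairs that a layout could highlight as eye contact.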

TapGazer: Touch Typing with Finger Tapping and Gaze-directed Word Selection (Ongoing)

In the years to come, smartphones may be replaced by inexpensive and lightweight eyeglasses capable of displaying augmented reality. Ideally those future glasses will incorporate gaze detection, and will also track hand and finger movements. Wearers of those glasses should then be able to type text simply by tapping their fingers on any surface.

In anticipation of this future, we present TapGazer, a text entry system in which users type by tapping their fingers, without needing to look at their hands or be aware of their hand position. Ambiguity is resolved at the word level, by using gaze for word selection. In the absence of gaze tracking, disambiguation can be effected by additional taps.

User studies show that TapGazer works with different devices and is easy to learn, with beginners reaching 52.17 words per minute on average, achieving 77% of their QWERTY typing speed with gaze tracking and 58% without.
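
To make the word-level disambiguation idea concrete, here is a minimal sketch. It is not the TapGazer implementation: the finger-to-letter layout (standard QWERTY touch-typing columns), the tiny vocabulary, and the function names are all assumptions for illustration. Each tap only identifies a finger, so one tap sequence can match several words, and a selection index (from gaze, or from additional taps) picks one candidate.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Sequence, Tuple

# Assumed layout: each finger covers its usual QWERTY touch-typing columns.
FINGER_KEYS = {
    "L4": "qaz", "L3": "wsx", "L2": "edc", "L1": "rfvtgb",
    "R1": "yhnujm", "R2": "ik", "R3": "ol", "R4": "p",
}
LETTER_TO_FINGER = {ch: finger for finger, keys in FINGER_KEYS.items() for ch in keys}


def encode(word: str) -> Tuple[str, ...]:
    """Map a word to the sequence of fingers that would type it."""
    return tuple(LETTER_TO_FINGER[ch] for ch in word.lower())


def build_index(vocabulary: Sequence[str]) -> Dict[Tuple[str, ...], List[str]]:
    """Group vocabulary words by their (ambiguous) finger sequence."""
    index: Dict[Tuple[str, ...], List[str]] = defaultdict(list)
    for word in vocabulary:
        index[encode(word)].append(word)
    return index


def candidates(index: Dict[Tuple[str, ...], List[str]], taps: Sequence[str]) -> List[str]:
    """Return every word consistent with the tapped finger sequence."""
    return index.get(tuple(taps), [])


def select(cands: List[str], choice: int) -> Optional[str]:
    """Pick one candidate; `choice` stands in for gaze or extra taps."""
    return cands[choice] if 0 <= choice < len(cands) else None
```

With the vocabulary `["the", "bye", "cat"]`, the taps `L1, R1, L2` match both "the" and "bye", so the ambiguity is resolved only when a candidate is selected.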

CollaboVR: A Reconfigurable Framework for Creative Collaboration in Virtual Reality

In this paper, we present CollaboVR, a reconfigurable framework for both co-located and geographically dispersed multi-user communication in VR. Our system unleashes users’ creativity by sharing freehand drawings, converting 2D sketches into 3D models, and generating procedural animations in real time. To minimize the computational expense for VR clients, we leverage a cloud architecture in which the computationally expensive application (Chalktalk) is hosted directly on the servers, with results being simultaneously streamed to clients. We have explored three custom layouts (integrated, mirrored, and projective) to reduce visual clutter, increase eye contact, or adapt to different use cases. To evaluate CollaboVR, we conducted a within-subject user study with 12 participants. Our findings reveal that users appreciate the custom configurations and real-time interactions provided by CollaboVR. We will open source CollaboVR to facilitate future research and development of natural user interfaces and real-time collaborative systems in virtual and augmented reality.

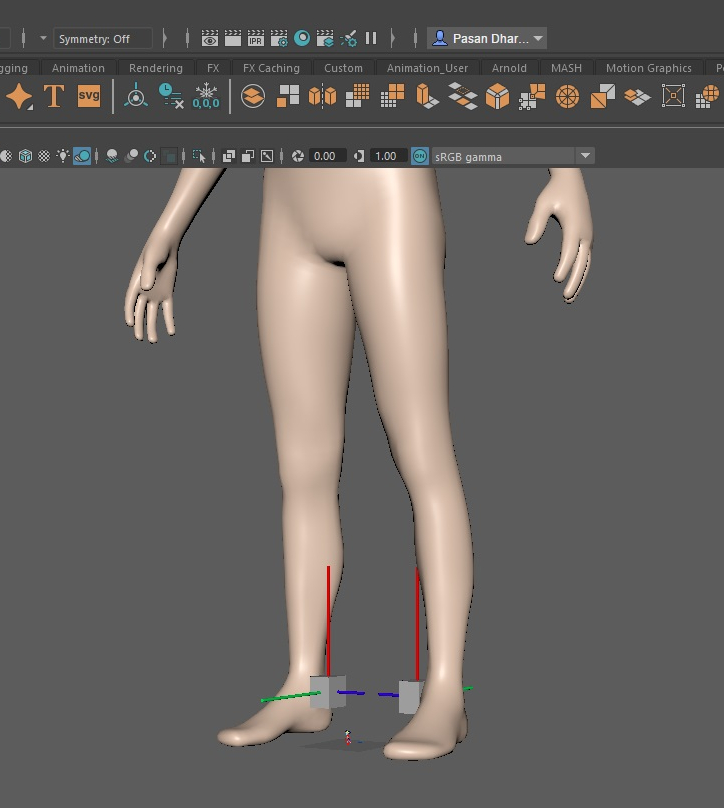

Inside-out Tracking Full-body Avatar (Ongoing)

We provide a novel machine-learning approach to reconstruct the human body using only inside-out tracking devices.

Avatar Representation in VR Video Conferencing (Intern Project)

We implement a 2D video image representation and a 3D avatar representation for comparison. To reduce the uncanny feeling of 2D video images in VR, we designed five variants: no-change, cropped, segmented, frame, and portal. We applied a social presence index framework in an ongoing user study.

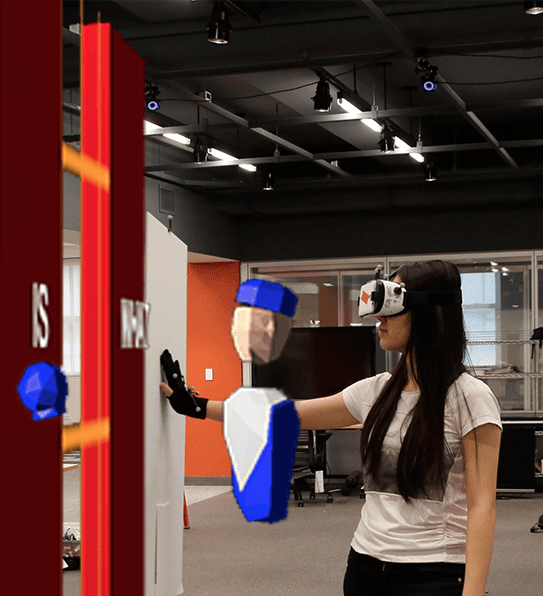

Exploring Configuration of Mixed Reality Spaces for Communication

We present our MR multi-user workstation system to investigate how different configurations of participants and content affect communication between people in the same immersive environment. We designed and implemented side-by-side, mirrored face-to-face and eyes-free configurations in our multi-user MR environment.

Handwriting in VR (Intern Project)

We propose a handwriting approach with haptic feedback in VR for surface writing. Users can either hold a regular pen or make a pinch gesture in VR to perform writing tasks. Gestures are detected by the head-mounted cameras with learning algorithms, and surfaces are detected with markers.

Manifest the Invisible: Design for Situational Awareness (SA) of Physical Environments in VR

Zhenyi He, Fengyuan Zhu, Ken Perlin, Xiaojuan Ma, arXiv 2018.

Five representational fidelities (indexical, symbolic, and iconic with three emotions: positive, neutral, and negative) for displaying ambient entities in VR are designed based on existing aesthetic theory, and the efficacy of these designs is explored in terms of raising users’ SA while preventing breaks in virtual presence.

Talk as a representative of Ken at the Global Cre8 Summit

Chalktalk VR/AR is a paradigm for creating drawings in the context of a face-to-face brainstorming session supported by VR or AR. Participants draw their ideas in the form of simple sketched simulation elements, which can appear to be floating in the air between participants. Those elements are then recognized by a simple AI recognition system, and participants can interactively incorporate them into an emerging simulation, building more complex simulations by linking these elements together in the course of the discussion.

HoloKit is a low-cost, open-source mixed reality experience that includes the HeadKit cardboard headset and TrackKit software.

With your smartphone and Mixed Reality apps, HoloKit provides you access to the world of Mixed Reality right in your hands, affordably.

I helped create several demos for the release.

PhyShare: Sharing Physical Interaction in Virtual Reality

PhyShare is a new approach for interaction in VR that uses robots as proxies for haptic feedback. This approach allows VR users to have the experience of sharing and manipulating tangible physical objects with remote collaborators.

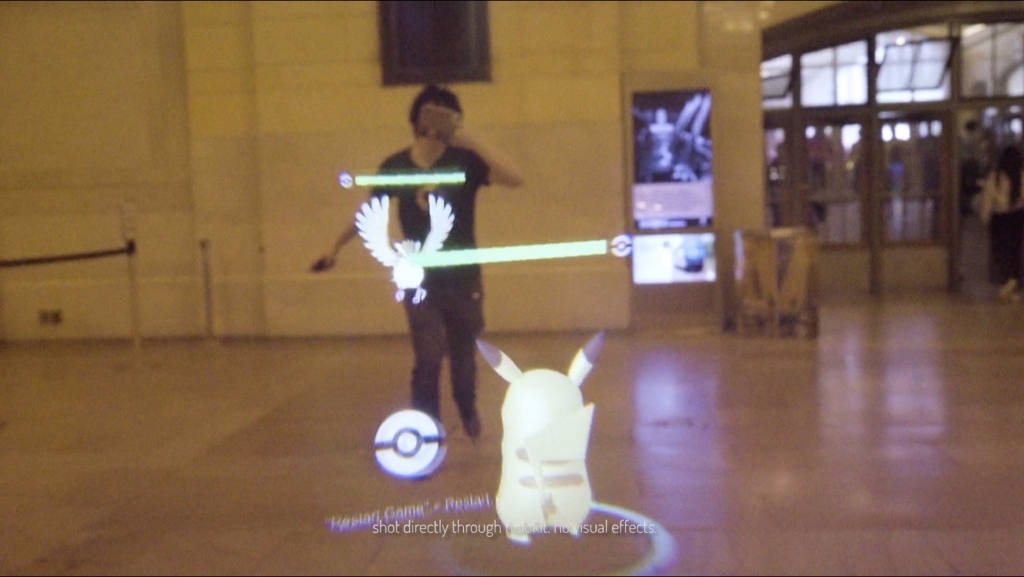

Holojam is a shared immersive experience. Each participant puts on a lightweight wireless motion-tracked GearVR headset as well as strap-on wrist and ankle markers. These devices allow them to see everyone else as an avatar, walk around the physical world, and interact with real physical objects. Participants see the physical world around them, but it is visually transformed. People can draw in the air and collaborate by freely mixing physical and virtual objects.

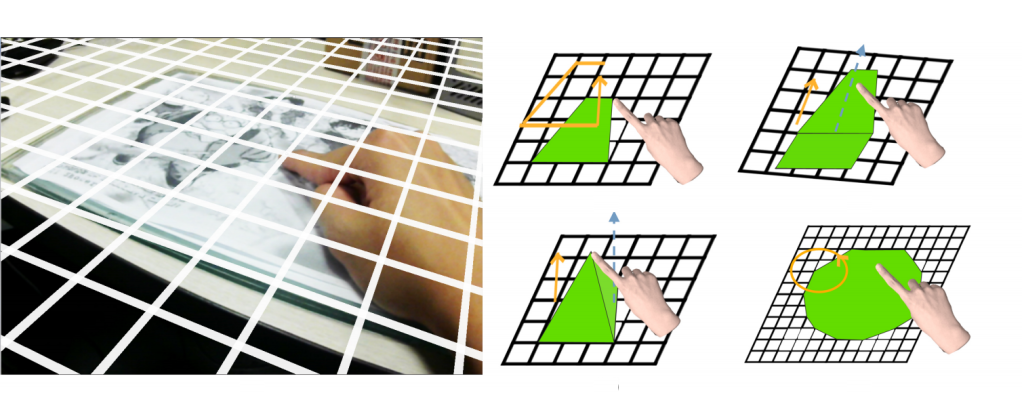

Object Creation Based on Free Virtual Grid with ARGlass

Zhenyi He and Xubo Yang. 2014. In SIGGRAPH Asia 2014 Posters (SA ’14).

A pair of homemade AR eyeglasses is built from cameras, Leap Motion, and Oculus VR, and a new approach called Free Virtual Grid is designed to help create 3D models. Users can rotate the free virtual grid and scale the square size to adjust the orientation and precision.

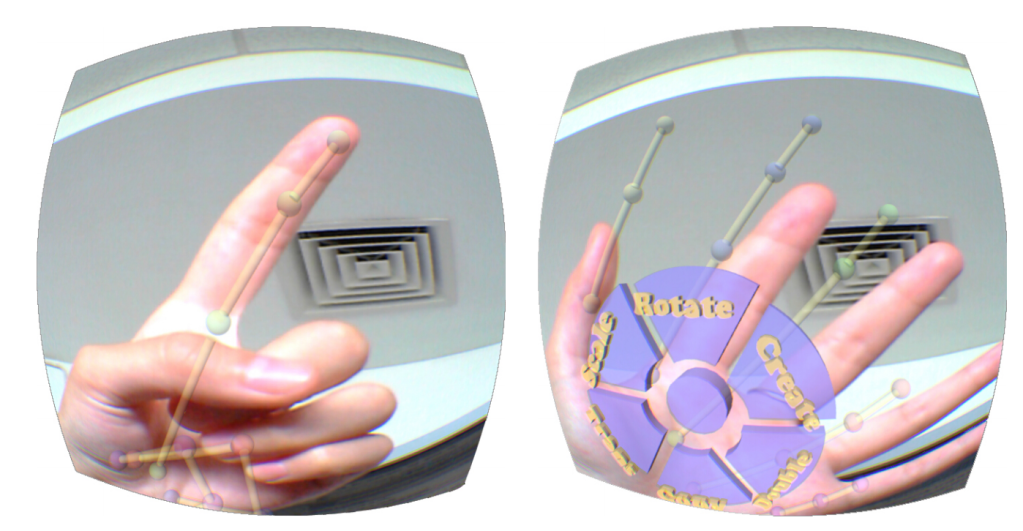

Hand-Based Interaction for Object Manipulation

Three types of 3D menu placement with AR eyeglasses, along with unimanual and bimanual hand-based interactions for basic manipulation of virtual objects, are designed.

Exhibition Experience

- The outpost at SIGGRAPH 2020

- ChalktalkVR in Oculus Education at Oculus Connect 6, 2019

- ChalktalkVR at Facebook, 2019

- CAVE at SIGGRAPH 2018

- HoloDoodle at SIGGRAPH 2017

- HoloKit at AR in Action, 2017

- Chalktalk at Global Cre8 Summit in Shenzhen, 2017

Professional Experience

Software Engineer Intern @ Facebook AI Face Tracking.

Full-time intern, June 2019 – August 2019

Research Intern @ Oculus Pittsburgh.

Full-time intern, June 2018 – August 2018,

Part-time intern, October 2018 – December 2018.

Academic Service (Program Committee)

Associate Chair (AC) for CHI 2020 Late Breaking Work

Academic Service (Reviewer)

- CHI 2019, 2020, 2021

- SIGGRAPH ASIA 2019

- UIST 2019, 2020

- IEEE VR 2019

- ICMI 2020

- VRST 2018, 2020

- Eurohaptics 2020

- ISS 2020

Teaching Assistant

- Computer Graphics 9/2017 – 12/2017 and 9/2016 – 12/2016

- Human Computer Interaction and Interface 2/2012 – 6/2012 and 2/2013 – 6/2013

- Programming and Data Structure 9/2012 – 1/2013 and 2/2013 – 6/2013 (received the Outstanding Teaching Assistant Award)

Awards and Honors

- The 2017 Snap Inc. Research Fellowship (top 12), 2017.

- Merit Students from the university (top 3 in dept), 2014.

- Outstanding League Leader, 2013.

- Outstanding Teaching Assistant, 2012.

- Shanghai Outstanding Undergraduate Student (top 6 in dept), 2012.

- Outstanding League Member, 2011.

- Merit Students from the university, 2010.

- Advanced Individual from the university, 2010.

- Third Grade of CASC Airplane Scholarship (top 10 in dept), 2013.

- People’s Scholarship Grade B from the university, 2011.